Australia lagging behind on big tech as disinformation spreads like wildfire in our democracy

Millions of Australians rely on social media networks like TikTok, Facebook, Instagram and Twitter for staying up to date with news, friends, family and the wider world. But these powerful big tech platforms are driving the spread of disinformation and hate speech like a wildfire engulfing Australia’s democracy.

While Australia has been an early mover on reform for online safety and digital media, it lags on key aspects of regulating digital platforms.

The Human Rights Law Centre has provided a submission to the parliamentary inquiry into the influence of international digital platforms, making three key recommendations:

1. The Federal Government should move away from self-regulatory and co-regulatory models for digital platforms, by replacing existing co-regulatory codes and ensuring new regulations are written by legislators or regulators.

2. The Federal Government should introduce a comprehensive digital regulatory framework for Australia, focused on transparency and risks arising from platforms’ systems and processes. This should include:

- requirements for major platforms to undertake and publish risk assessments that identify human rights risks and other forms of harm, with corresponding obligations to develop and implement mitigation measures;

- a data access regime to support civil society research and enhance transparency;

- measures to give users greater control over the collection and use of their personal data, including by making recommender systems opt-in and by limiting forms of profiling;

- broad information-gathering and enforcement powers for an independent, well-resourced and integrated regulator.

3. Parliament should consider mechanisms for enhancing parliamentary scrutiny of digital regulation, such as the establishment of a dedicated committee for digital affairs, and for improving the coordination of tech policy across government.

Scott Cosgriff, Senior Lawyer at the Human Rights Law Centre, said:

“Technology should serve communities, not put people at risk. Australia’s regulatory framework for digital platforms should be centred around the protection of human rights and community interests.

“Big tech digital platforms are allowing disinformation to spread like wildfire in our democracy. Instead of the lax, voluntary and ineffective self-regulation measures currently in place, we need laws to make digital platforms more transparent and accountable.

“As long as business as usual continues for big tech, Australian people and our human rights will be under threat. But disinformation online is a democratic problem with a democratic solution. We should have greater control over our own data and we need greater transparency around why certain content floods our feeds. We need comprehensive laws and checks in place to limit the amplification of disinformation that causes harm and undermines trust in institutions.”

Media Contact:

Thomas Feng

Human Rights Law Centre

Media and Communications Manager

thomas.feng@hrlc.org.au

0431 285 275

Albanese Government must act on whistleblower reform as David McBride’s appeal dismissed

The Human Rights Law Centre, the Alliance for Journalists’ Freedom and the Whistleblower Justice Fund are calling on the Albanese Government to act on urgent, robust whistleblower protection reform, after war crimes whistleblower David McBride’s appeal was dismissed today.

Read more

Tax whistleblower Richard Boyle’s guilty plea an indictment on Australia’s broken whistleblowing laws

The Human Rights Law Centre and the Whistleblower Justice Fund have condemned the Albanese Government’s ongoing prosecution of Richard Boyle, as the tax office whistleblower pleaded guilty at a hearing in Adelaide today.

Read more

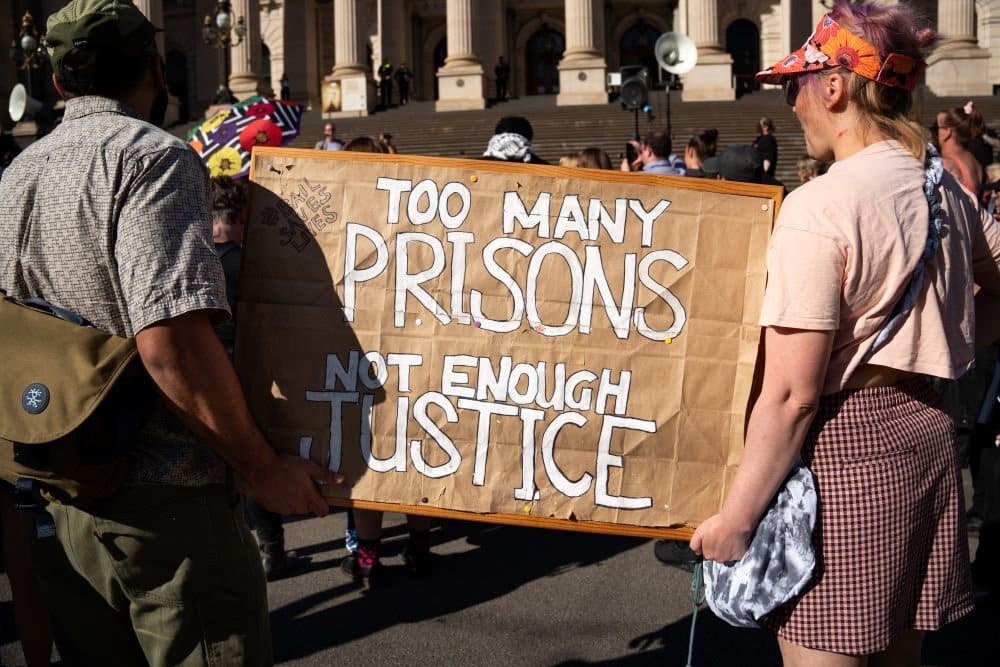

Crisafulli Government’s shameful adult sentencing laws will harm kids, families, and communities

The Human Rights Law Centre and Change the Record have slammed the Crisafulli Government for passing laws that will sentence even more children to adult-length terms of imprisonment. The laws will lock up children for even longer, and harm kids, families, and communities.

Read more